New preprint out on the regularization properties of noise injection!

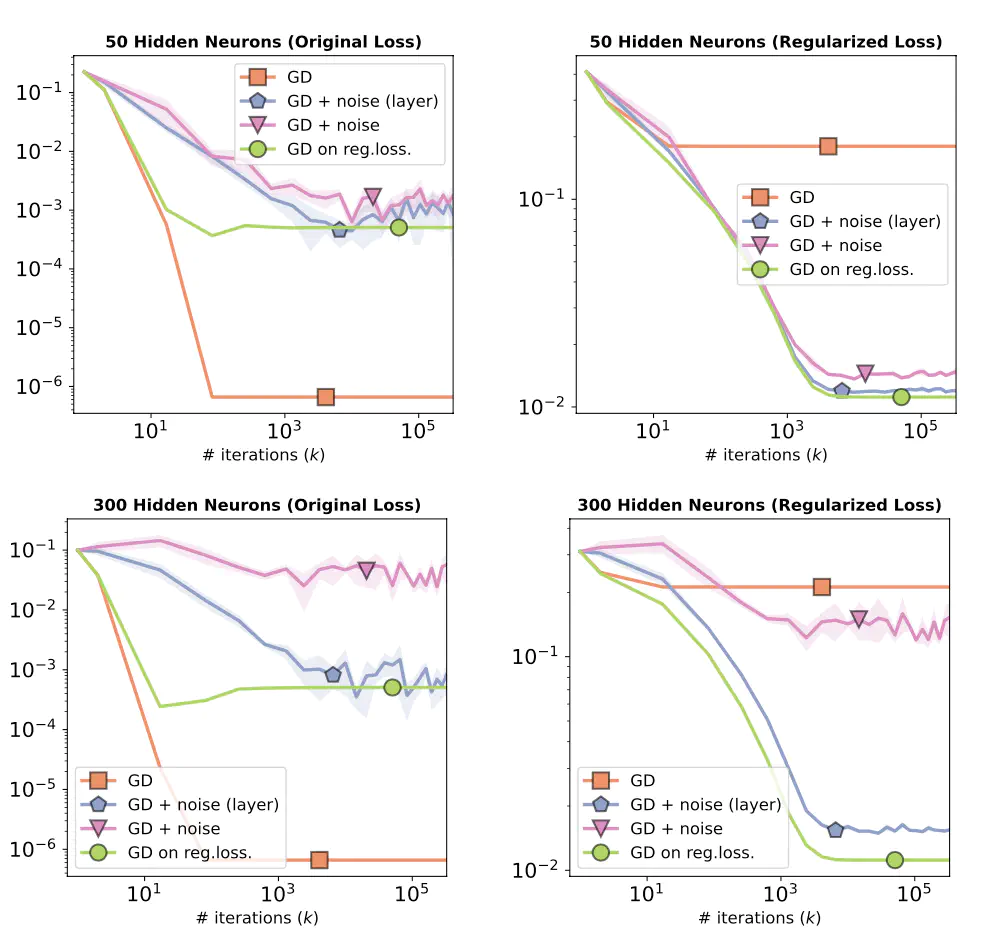

MLP with one hidden layer and linear activations on synthetic data. Injecting noise layer-wise and injecting it into all weights both lead to minimization of the regularized loss.

This new paper is a follow-up to our Anti-PGD paper (ICML 2022). This time we show that small perturbations induce explicit regularization, which we spell out for a few models. Our Theorem 2 opens up many new research avenues.
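For intuition on how small perturbations can act as an explicit regularizer, here is a minimal sketch on a toy problem. It is not the paper's setup or the statement of Theorem 2. It assumes a scalar "linear network" x -> u * v * x, made-up data `X` and `Y`, and independent Gaussian noise of scale `sigma` added to both weights; all names and values are hypothetical. For this toy model the expected noisy loss can be computed in closed form: it equals the plain loss plus a penalty whose leading term is (sigma^2 / 2) times the trace of the Hessian.

```python
import random

# Hypothetical toy data, chosen only for illustration.
X = [1.0, 2.0, -1.5]
Y = [0.5, 1.0, -0.7]


def loss(u, v):
    """Half mean squared error of the scalar linear network x -> u * v * x."""
    return 0.5 * sum((u * v * x - y) ** 2 for x, y in zip(X, Y)) / len(X)


def explicit_regularizer(u, v, sigma):
    """Exact E[loss(u + sigma*e1, v + sigma*e2)] - loss(u, v) for independent
    standard normal e1, e2 (valid for this toy model only)."""
    mean_x2 = sum(x * x for x in X) / len(X)
    # The sigma^2 term is (sigma^2 / 2) * trace(Hessian of the loss);
    # the sigma^4 term is the exact higher-order remainder for this model.
    return 0.5 * mean_x2 * (sigma**2 * (u**2 + v**2) + sigma**4)


def noisy_loss_estimate(u, v, sigma, n_samples=200_000, seed=0):
    """Monte Carlo estimate of the expected loss under Gaussian weight noise."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_samples):
        noisy_u = u + sigma * rng.gauss(0.0, 1.0)
        noisy_v = v + sigma * rng.gauss(0.0, 1.0)
        total += loss(noisy_u, noisy_v)
    return total / n_samples


u, v, sigma = 0.8, -0.5, 0.1
print("plain loss:          ", loss(u, v))
print("loss + regularizer:  ", loss(u, v) + explicit_regularizer(u, v, sigma))
print("Monte Carlo estimate:", noisy_loss_estimate(u, v, sigma))
```

The last two printed values should agree up to Monte Carlo error, which shows that averaging the loss over weight noise amounts to minimizing the original loss plus a penalty on `u**2 + v**2` in this toy case.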